
Submitted: December 09, 2020 | Approved: January 12, 2021 | Published: January 13, 2021

How to cite this article: Sharifnezhad A, Abdollahzadekan M, Shafieian M, Sahafnejad-Mohammadi I. C3D data based on 2-dimensional images from video camera. Ann Biomed Sci Eng. 2021; 5: 001-005.

DOI: 10.29328/journal.abse.1001010

ORCiD: orcid.org/0000-0002-5855-1441

Copyright License: © 2021 Sharifnezhad A, et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Keywords: Musculoskeletal modeling; C3D; Motion analysis; Motion capture; Video-based; Matlab

C3D data based on 2-dimensional images from video camera

Ali Sharifnezhad1*, Mina Abdollahzadekan2, Mehdi Shafieian2 and Iman Sahafnejad-Mohammadi3

1Department of Sport Biomechanics, Sport Science Research Institute, Tehran, Iran

2Department of Biomedical Engineering, Amirkabir University of Technology, Tehran, Iran

3Department of Biomedical Engineering, Science and Research Branch, Azad University, Tehran, Iran

*Address for Correspondence: Dr. Ali Sharifnezhad, Department of Sport Biomechanics and Technology, Sport Sciences Research Institute, No 3, Alley 5, Mir Emad St., Motahari St., Tehran, Iran, Tel: +989120924450

Three-dimensional (3D) human musculoskeletal models rely on motion analysis data, which are usually obtained from dedicated motion capture systems that export 3D data in the Coordinate 3D (C3D) format. These systems require specialized cameras and software, are expensive, and are time-consuming to use. To address these problems, this research uses ordinary video cameras and open-source software to obtain 3D data and create C3D files. Movements were captured with two video cameras, and marker coordinates were obtained with SkillSpector. MATLAB functions were then used to create C3D data from the 3D coordinates of the body points. During each validation test, the subject was captured simultaneously by the Cortex system and the two video cameras. The mean correlation coefficient across datasets was 0.7. Subject to a more detailed comparison, this method could serve as an alternative for motion analysis. The C3D data collection presented in this research is more accessible and cost-efficient than other systems and requires only two cameras.

Human movement may be analyzed for various purposes, including identifying the details of a movement (such as joint degrees of freedom and stride length), diagnosing motor and musculoskeletal disorders (such as orthopedic or postural disorders), and improving the performance of athletes or injured people [1].

In many cases, a physician or analyst observes the movement and assesses it based on experience. This approach may work in some cases, but it is not useful for the quick movements of athletes, and it is always prone to error. Today, motion data can be obtained quantitatively, and analyses can be performed more accurately using computers and motion analysis systems [2].

Human body movements take place mainly in the sagittal and frontal planes, that is, in more than one plane. Therefore, the output of motion analysis systems such as Vicon, Visual3D, or Cortex is 3D coordinates. Visual3D and Cortex are professional biomechanical analysis software packages that compute movement and force data obtained from almost any type of 3D motion capture technique. For instance, Cortex has many capabilities for analyzing C3D data, including fully three-dimensional analysis and display from any viewing angle, calculation of kinematic and kinetic quantities, segment definition and joint angle calculation, and synchronization with electromyography (EMG) and force plates. The Vicon motion capture system consists of complex hardware and software for collecting, analyzing, and processing biomechanical data.

Because 3D analysis is complicated and costly, some researchers prefer 2D motion analysis. In addition, multi-camera motion capture requires the cameras to be synchronized, the angle between the camera views must be 90 ± 30°, complicated calibration settings are needed, and some events are recorded only from a single two-dimensional (2D) video view [3,4]. Thus, 2D motion analysis is less challenging, whereas 3D analysis is more accurate [5]. For example, Fernández-Baena, et al. measured joint angles with the Kinect system for clinical rehabilitation purposes [6]. Kinect's advantages over other motion capture systems are its portability and low cost. In that study, Kinect devices and video games were used as rehabilitation tools for patients. Despite these benefits, the system's limited capture capacity and low resolution indicate that more studies are needed before it can be used for clinical biomechanical purposes.

This study aims to provide an inexpensive and accessible offline method for gait analysis based on 2D video recordings, with the results converted to C3D data.

The output of 3D motion capture is stored in C3D format, which contains all kinetic and kinematic data of the motion [7]. A C3D file holds both marker position data and analog data: the 3D marker coordinates, analog signals from EMG and force plates, and the subject's personal data are all integrated into a single file [8].
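To make the file structure concrete, the sketch below reads the fixed 512-byte header block at the start of a C3D file, which records the number of markers, the frame range, and the frame rate. It follows the header word layout described in the C3D user guide [8], assumes Intel (little-endian) number formats, and does not parse the parameter or data sections. The class and function names are illustrative, and the header in the usage example is synthetic.

```python
import struct
from dataclasses import dataclass


@dataclass
class C3DHeader:
    parameter_block: int          # 512-byte block where the parameter section starts
    n_points: int                 # number of 3D points (markers) per frame
    n_analog_per_frame: int       # total analog measurements per 3D frame
    first_frame: int
    last_frame: int
    max_gap: int                  # maximum interpolation gap, in frames
    scale: float                  # a negative scale means point data are stored as floats
    data_block: int               # 512-byte block where point/analog data start
    analog_samples_per_frame: int
    frame_rate: float             # 3D frame rate in Hz


def parse_c3d_header(block: bytes) -> C3DHeader:
    """Parse the first 512-byte block of a C3D file (little-endian assumed)."""
    if len(block) < 512:
        raise ValueError("a C3D header block is 512 bytes long")
    if block[1] != 0x50:
        raise ValueError("byte 2 of a C3D file should be 0x50")
    # Words 2-6: 16-bit integers starting at byte offset 2
    n_points, n_analog, first, last, gap = struct.unpack_from("<5H", block, 2)
    # Words 7-8: 32-bit float scale factor
    (scale,) = struct.unpack_from("<f", block, 12)
    # Words 9-10: data start block and analog samples per 3D frame
    data_block, analog_spf = struct.unpack_from("<2H", block, 16)
    # Words 11-12: 32-bit float frame rate
    (rate,) = struct.unpack_from("<f", block, 20)
    return C3DHeader(block[0], n_points, n_analog, first, last, gap,
                     scale, data_block, analog_spf, rate)


# Synthetic header: 5 markers, frames 1-136, 120 Hz, float storage
header = bytearray(512)
header[0], header[1] = 2, 0x50
struct.pack_into("<5H", header, 2, 5, 0, 1, 136, 10)
struct.pack_into("<f", header, 12, -1.0)
struct.pack_into("<2H", header, 16, 11, 0)
struct.pack_into("<f", header, 20, 120.0)
h = parse_c3d_header(bytes(header))
print(h.n_points, h.last_frame, h.frame_rate)
```

The header alone is enough to check that a converted file declares the expected marker count and frame rate before it is loaded into modeling software.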

According to a previous study by Eman Fares Al Mashagba, et al. [9], gait analysis has three main steps: (a) motion capture (recording the movement with static or dynamic cameras), (b) segmentation (for example, the placement and color of markers), and (c) analysis of the collected data with software (such as OpenSim and AnyBody). Accordingly, in this study we present a new method to obtain C3D data from 2D video.

Because body movement modeling is important in biomechanics, many studies require C3D data to achieve their goals. For example, musculoskeletal modeling software such as AnyBody or OpenSim cannot be used without C3D input files. Systems and software designed for this purpose are very expensive and require considerable experience to operate. Moreover, optimal output requires the help of experts who are fully aware of all the errors that may occur during the procedure. For these reasons, obtaining kinetic and kinematic body data in C3D format takes considerable time and effort, which can create many difficulties in the research process.

On the other hand, some free open-source programs can obtain the coordinates of body points. Their output consists only of the 3D coordinates of different body parts in a text file, which can only be used to view the movement. As mentioned, musculoskeletal modeling software requires data in C3D format, which these open-source programs cannot produce.

Several methods have been presented for movement modeling, including vision-based (marker or markerless) and sensor-based (body-worn sensors or force platforms) approaches [10,11]. Some of these methods are based on estimating a person's posture [12], while others use only one camera, and thus a single two-dimensional image, to reconstruct a three-dimensional pose probabilistically [13]. Other methods are based on the Direct Linear Transformation (DLT) [14].
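For reference, the standard 11-parameter DLT of Abdel-Aziz and Karara [14] relates a point (X, Y, Z) in the calibrated space to its image coordinates (u, v) in one camera through the parameters L_1 to L_11 (lens-distortion terms are omitted here):

$$
u = \frac{L_1 X + L_2 Y + L_3 Z + L_4}{L_9 X + L_{10} Y + L_{11} Z + 1}, \qquad
v = \frac{L_5 X + L_6 Y + L_7 Z + L_8}{L_9 X + L_{10} Y + L_{11} Z + 1}
$$

Multiplying out the denominators gives two equations per camera that are linear in X, Y, and Z:

$$
\begin{aligned}
(L_1 - u L_9)\,X + (L_2 - u L_{10})\,Y + (L_3 - u L_{11})\,Z &= u - L_4 \\
(L_5 - v L_9)\,X + (L_6 - v L_{10})\,Y + (L_7 - v L_{11})\,Z &= v - L_8
\end{aligned}
$$

One camera therefore gives two equations for three unknowns, which is why each marker must be seen by at least two cameras. With two cameras there are four equations, and the system is usually solved by least squares.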

As DLT became more common in 3D reconstruction, software was developed that outputs the 3D coordinates of points from 2D images. One open-source program for this purpose, developed in 2008, is SkillSpector. Its output is not in C3D format, so the data cannot be used in musculoskeletal modeling.

Therefore, the purpose of this research is to obtain 3D data in C3D format with two video cameras and open-source software, and to assess the validity of this method.

In this study, the 3D coordinates of the points were obtained by recording the markers with two video cameras and processing the videos in SkillSpector. The angle between the two cameras was set at 90°. SkillSpector synchronizes the cameras and reconstructs 3D coordinates from the 2D video data using the direct linear transformation (DLT) [4]. To make these data usable, the point coordinates were converted to C3D format in MATLAB. Finally, to assess validity, the data were compared with the output of the Cortex motion analysis system.
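The two-camera reconstruction step can be sketched as follows. This is not SkillSpector's implementation. It assumes that each camera's 11 DLT parameters are already known from calibration and solves the two linear DLT equations per camera by least squares with NumPy. The two parameter sets in the example are hypothetical and were chosen so that the cameras' optical axes are perpendicular, as in this study's setup.

```python
import numpy as np


def dlt_project(L, xyz):
    """Image coordinates (u, v) of a 3D point for DLT parameters L[0..10] = L1..L11."""
    X, Y, Z = xyz
    den = L[8] * X + L[9] * Y + L[10] * Z + 1.0
    u = (L[0] * X + L[1] * Y + L[2] * Z + L[3]) / den
    v = (L[4] * X + L[5] * Y + L[6] * Z + L[7]) / den
    return u, v


def dlt_reconstruct(params, uv):
    """Least-squares 3D position of one marker seen by two or more calibrated cameras.

    params: one 11-element DLT parameter vector per camera.
    uv: the marker's (u, v) image coordinates in each camera, in the same order.
    """
    rows, rhs = [], []
    for L, (u, v) in zip(params, uv):
        # Two linear equations per camera, obtained by clearing the DLT denominator
        rows.append([L[0] - u * L[8], L[1] - u * L[9], L[2] - u * L[10]])
        rhs.append(u - L[3])
        rows.append([L[4] - v * L[8], L[5] - v * L[9], L[6] - v * L[10]])
        rhs.append(v - L[7])
    xyz, *_ = np.linalg.lstsq(np.array(rows, float), np.array(rhs, float), rcond=None)
    return xyz


# Hypothetical cameras: A looks along +Y, B looks along +X (perpendicular views)
cam_a = [800, 0, 0, 400, 0, 0, 800, 300, 0.0, 0.2, 0.0]
cam_b = [0, 800, 0, 400, 0, 0, 800, 300, 0.2, 0.0, 0.0]
marker = (0.3, 0.5, 1.0)  # metres
uv = [dlt_project(cam_a, marker), dlt_project(cam_b, marker)]
xyz_est = dlt_reconstruct([cam_a, cam_b], uv)
print(xyz_est)  # approximately [0.3 0.5 1.0]
```

With real, noisy digitized points the four equations are no longer exactly consistent, and the least-squares solution spreads the error over all coordinates.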

Motion capture

In general, there are two basic types of motion capture (MOCAP) systems: online and offline [5]. The main difference is that online methods track movements in real time, whereas in offline methods the tracking and biomechanical analysis are performed on previously recorded video [5]. The method presented in this study is offline.

Ten healthy male subjects (age: 23.5 ± 2.2 years; height: 182.3 ± 2.7 cm; weight: 78.1 ± 7.8 kg) were randomly selected to participate in this test. Each subject completed a full gait cycle at a self-selected pace. To assess the validity of the obtained data, the Cortex system with six Raptor-E cameras recording at 200 frames per second (fps) (the measurement accuracy of this camera type has been confirmed [15]) and two Casio video cameras recording at 120 fps were run simultaneously. To obtain 3D coordinates, every marker must be visible to both Casio cameras [16]; therefore, the two Casio cameras were positioned perpendicular to each other.

Calibration was performed separately for each system to define the origin of its coordinate system. For the Casio video cameras, calibration was done with a 1 m × 1 m × 1 m cubic calibration object. The coordinates of the movement can then be calculated in the calibrated coordinate system [17].
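Calibrating with the cube amounts to estimating each camera's 11 DLT parameters from control points whose 3D positions are known. The sketch below is a minimal least-squares version, not SkillSpector's procedure. It assumes the eight corners of the 1 m cube as control points (at least six non-coplanar points are needed). The image coordinates are hypothetical and are generated from a known camera, so the recovered parameters can be checked against it.

```python
import itertools

import numpy as np


def dlt_calibrate(xyz, uv):
    """Estimate DLT parameters L1..L11 from >= 6 non-coplanar control points.

    xyz: known 3D coordinates of the control points (m).
    uv: their measured image coordinates (pixels), in the same order.
    """
    rows, rhs = [], []
    for (X, Y, Z), (u, v) in zip(xyz, uv):
        rows.append([X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z])
        rhs.append(u)
        rows.append([0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z])
        rhs.append(v)
    L, *_ = np.linalg.lstsq(np.array(rows, float), np.array(rhs, float), rcond=None)
    return L


def project(L, point):
    """Image coordinates of a 3D point under DLT parameters L."""
    X, Y, Z = point
    den = L[8] * X + L[9] * Y + L[10] * Z + 1.0
    return ((L[0] * X + L[1] * Y + L[2] * Z + L[3]) / den,
            (L[4] * X + L[5] * Y + L[6] * Z + L[7]) / den)


# Control points: the eight corners of a 1 m x 1 m x 1 m cube
corners = list(itertools.product([0.0, 1.0], repeat=3))
# Hypothetical camera, used only to generate example image coordinates
true_L = [800, 0, 0, 400, 0, 0, 800, 300, 0.0, 0.2, 0.0]
image_points = [project(true_L, c) for c in corners]
L_est = dlt_calibrate(corners, image_points)
print(np.round(L_est, 3))
```

In practice the image coordinates are digitized by hand or detected automatically, so they contain noise. A larger, rigid calibration object with more control points usually gives more stable parameters.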

Five markers (diameter 9.5 mm) were placed on the main joints of the right lower limb: toe, lateral malleolus, heel, lateral femoral condyle, and greater trochanter. The Cortex system detected these markers properly because they were recorded by six infrared cameras. However, in the SkillSpector setup, one of the video cameras could not see the heel marker. To solve this problem, a marker shaped as shown in Figure 1 was used.

Figure 1: Heel marker.

To use this marker, its middle is placed on the heel. The heel coordinates are then the average of the coordinates of markers A and B. When the data were converted to C3D format in MATLAB, this average was entered in place of the heel coordinates. The marker was designed so that the subject could walk easily and so that both A and B were visible to both Casio cameras. A 12-cm-long stick was used for this purpose.
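The heel substitution is a per-frame midpoint, matching the averaging in the MATLAB appendix. A minimal sketch, assuming each marker trajectory is a list of (x, y, z) tuples in metres. The rigidity check on the A-B distance is a hypothetical extra quality control based on the 12 cm stick and is not a step reported in this study. It assumes that A and B sit at the two ends of the stick.

```python
import math


def virtual_heel(marker_a, marker_b):
    """Per-frame midpoint of markers A and B, used in place of the heel marker."""
    return [tuple((pa + pb) / 2.0 for pa, pb in zip(a, b))
            for a, b in zip(marker_a, marker_b)]


def stick_length_errors(marker_a, marker_b, length_m=0.12):
    """Hypothetical check: reconstructed A-B distance minus the nominal stick length (m)."""
    return [math.dist(a, b) - length_m for a, b in zip(marker_a, marker_b)]


# Two frames of hypothetical A and B coordinates (m)
a = [(0.00, 0.0, 0.05), (0.10, 0.0, 0.05)]
b = [(0.12, 0.0, 0.05), (0.22, 0.0, 0.05)]
print(virtual_heel(a, b))
print(stick_length_errors(a, b))
```

Large or drifting length errors would point to digitizing or calibration problems in frames where the heel estimate is unreliable.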

Data analysis and conversion to C3D

The recorded videos were analyzed in SkillSpector. The analysis started from the first frame immediately after toe-off in the gait cycle, so that corresponding images from the two cameras could be identified. After the calibration object was identified in the images and the marker locations were specified in each frame, the program applied the DLT equations and output the 3D coordinates of the markers as a text file [18]. Biomechanical analysis usually requires the data to be in C3D format, so the SkillSpector output file was converted to C3D with MATLAB 8.5 (Appendix). Joint angles and marker velocities and accelerations can also be derived from the C3D file.
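Marker velocities and accelerations can be derived from the coordinates by finite differences. The sketch below uses central differences at the 120 fps of the video cameras, applied to one coordinate at a time. In practice, video-based coordinates are usually low-pass filtered before differentiation because differencing amplifies digitizing noise. Filtering is not shown here, and the trajectory in the example is hypothetical.

```python
def central_velocity(x, fps):
    """First derivative by central differences; returns len(x) - 2 interior values."""
    dt = 1.0 / fps
    return [(x[i + 1] - x[i - 1]) / (2.0 * dt) for i in range(1, len(x) - 1)]


def central_acceleration(x, fps):
    """Second derivative by central differences; returns len(x) - 2 interior values."""
    dt = 1.0 / fps
    return [(x[i + 1] - 2.0 * x[i] + x[i - 1]) / dt ** 2 for i in range(1, len(x) - 1)]


# One coordinate of a hypothetical marker (m), sampled at 120 fps
fps = 120
x = [0.5 * 9.81 * (i / fps) ** 2 for i in range(6)]  # uniformly accelerated motion
print(central_velocity(x, fps))
print(central_acceleration(x, fps))  # each value close to 9.81
```

Central differences are exact for quadratic trajectories, which makes the uniformly accelerated example a convenient check. The first and last frames have no central estimate and are dropped.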

Validation

To validate the output data, a quantitative comparison was made using the Cortex output as reference data. The marker trajectories were compared between the SkillSpector and Cortex systems. Each subject had five markers, and each marker had three components (x, y, and z axes), giving 150 data sets for the 10 subjects. The correlation coefficient was calculated for all 150 data sets in Microsoft (MS) Excel 2013. The Cortex system produced three times as many samples as the other system. To account for this frame-rate difference before comparing the data, every three consecutive Cortex coordinates were replaced by their average.
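The two processing steps described above can be sketched as follows. The first step averages non-overlapping groups of three Cortex samples. The second computes the Pearson correlation coefficient, which is what Excel's CORREL function returns. Dropping an incomplete final group is an assumption, since the text does not say how leftover samples were handled. The sample values are hypothetical.

```python
import math


def block_average(samples, factor=3):
    """Replace each non-overlapping group of `factor` samples with its mean.

    An incomplete final group is dropped (assumption).
    """
    n = len(samples) // factor * factor
    return [sum(samples[i:i + factor]) / factor for i in range(0, n, factor)]


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("series must have the same length (at least 2)")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


cortex = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 8.0, 9.0, 10.0]  # hypothetical Cortex samples
video = [2.1, 4.8, 9.3]                                 # hypothetical SkillSpector samples
cortex_ds = block_average(cortex)                       # three averaged samples
r = pearson_r(cortex_ds, video)
print(cortex_ds, r)
```

The correlation coefficient measures only how similar the shapes of the two curves are. It is unaffected by a constant offset between coordinate origins or by a uniform scale difference, so it does not measure absolute positional agreement.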

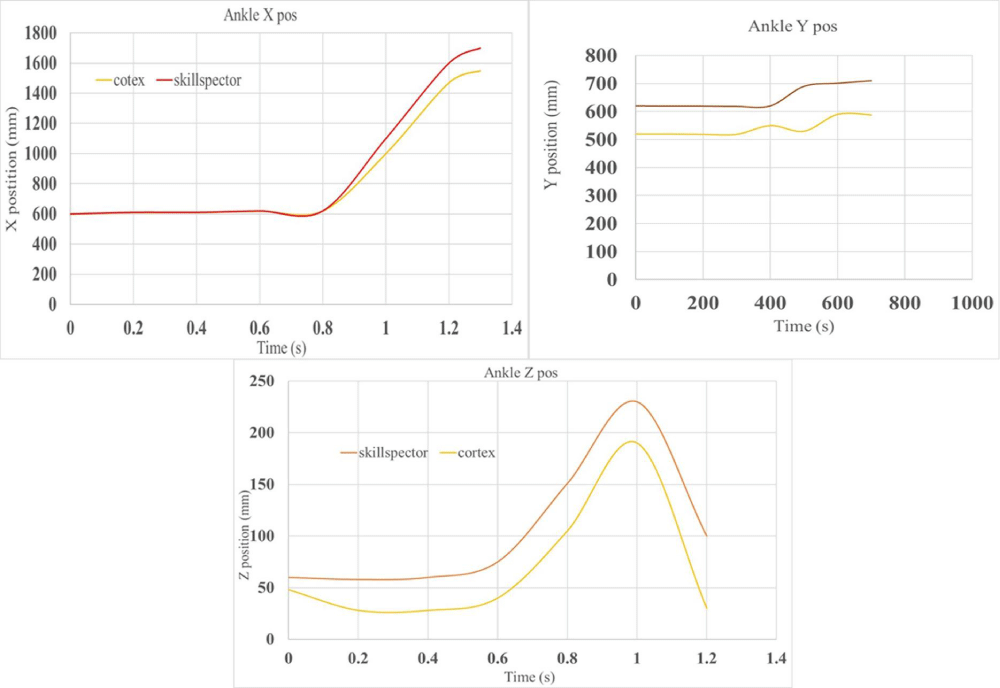

In total, 150 data series from all 10 participants were compared between SkillSpector and Cortex. Figure 2 shows a sample trajectory of one marker in both systems. Despite differences between the graphs of the two systems, the movement patterns are noticeably similar.

Figure 2: Sample trajectory of one marker in the SkillSpector and Cortex systems.

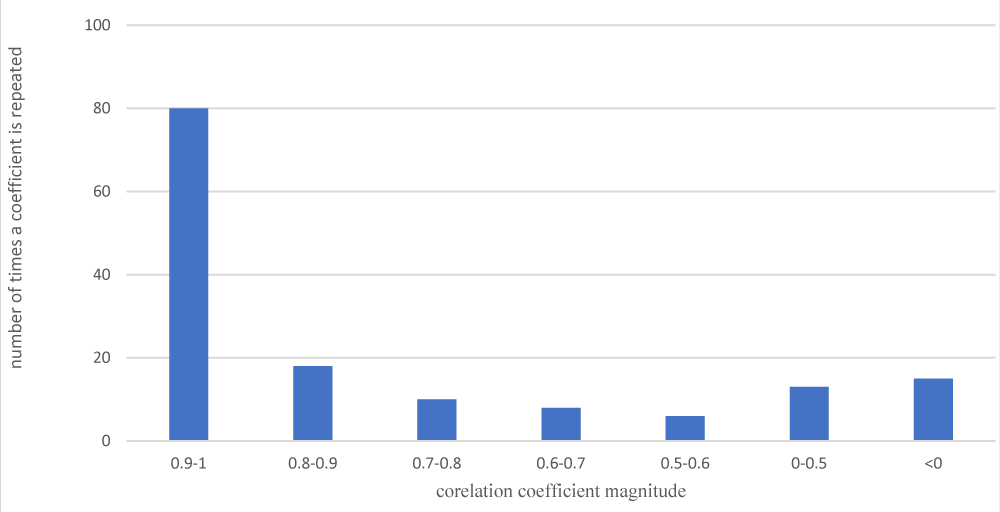

The average correlation coefficient for the 150 data series was 0.7164, indicating that the marker trajectories of the two systems were similar. On closer inspection, 80 of the coefficients were close to 1, indicating matching marker trajectories, and another 20 were about 0.9, for which the graphs were very similar. Figure 3 shows the individual correlation coefficients.

Figure 3: Individual correlation coefficients.

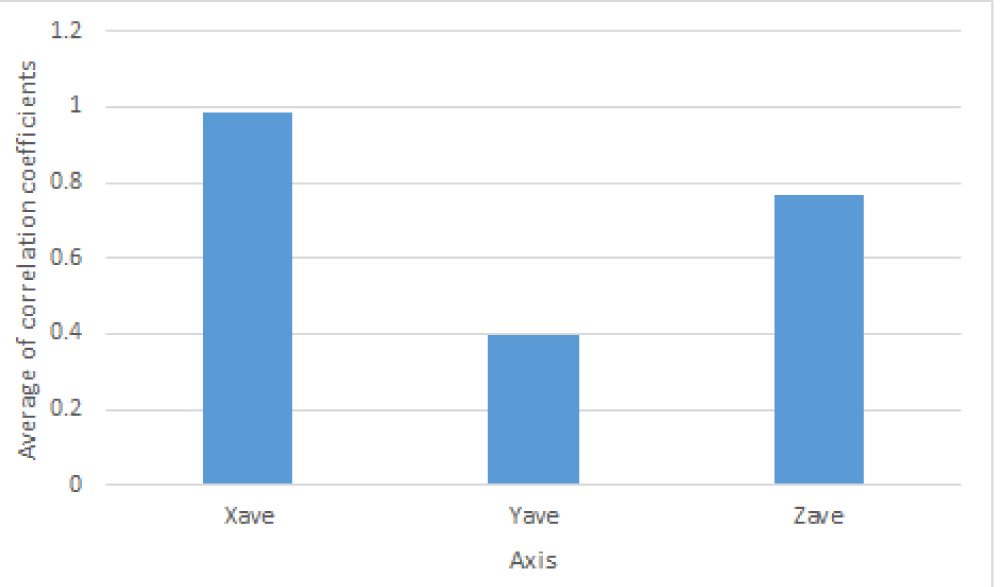

As Figure 4 shows, all X-axis coefficients are close to 1 and all Z-axis coefficients are close to 0.9. Only the Y-axis coefficients are small.

Figure 4: Average correlation coefficient for each of the x, y, and z axes.

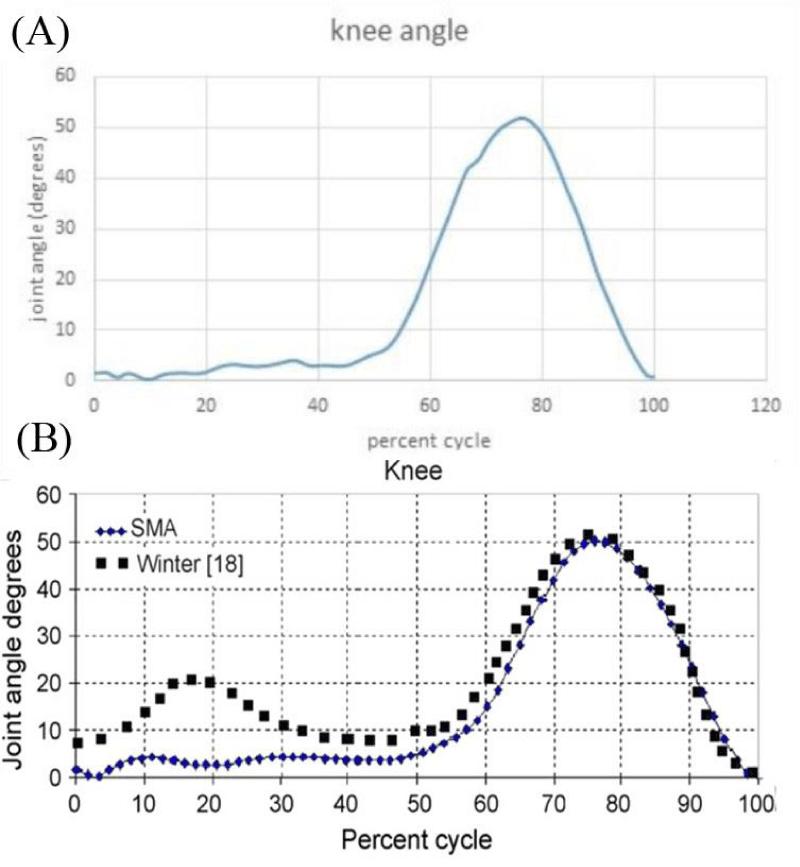
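Figure 4 summarizes the 150 coefficients by axis. The sketch below shows a minimal version of that grouping and of counting coefficients above a threshold, as in the counts reported for Figure 3. It assumes that each coefficient is stored as a (subject, marker, axis, r) record. That record layout is hypothetical, and the values in the example are dummy values for illustration, not study data.

```python
from collections import defaultdict
from statistics import mean


def mean_r_by_axis(records):
    """Average correlation coefficient per axis from (subject, marker, axis, r) records."""
    by_axis = defaultdict(list)
    for _subject, _marker, axis, r in records:
        by_axis[axis].append(r)
    return {axis: mean(values) for axis, values in sorted(by_axis.items())}


def count_at_least(records, threshold):
    """Number of coefficients at or above `threshold`."""
    return sum(1 for record in records if record[3] >= threshold)


# Dummy records for illustration only (not study data)
records = [(1, "toe", "x", 0.98), (1, "toe", "y", 0.30), (1, "toe", "z", 0.90)]
print(mean_r_by_axis(records))
print(count_at_least(records, 0.9))
```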

Figure 5A shows the change in knee angle during the gait cycle, computed from one subject's trajectory with the SkillSpector system.

Figure 5: A: Knee angle obtained from the SkillSpector system. B: Knee angle during a gait cycle according to Ref [19].
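One common way to obtain a knee angle from the three marker positions used here (greater trochanter, lateral femoral condyle, and lateral malleolus) is to take the angle between the thigh and shank vectors and report it as flexion, so that a straight leg gives 0°. The paper does not state how SkillSpector defines the angle, so the sketch below rests on that assumption. The example coordinates are hypothetical.

```python
import math


def knee_flexion_deg(hip, knee, ankle):
    """Knee flexion in degrees: 180 minus the hip-knee-ankle angle (assumed definition).

    A straight leg gives 0 degrees.
    """
    thigh = [h - k for h, k in zip(hip, knee)]    # knee -> greater trochanter
    shank = [a - k for a, k in zip(ankle, knee)]  # knee -> lateral malleolus
    dot = sum(t * s for t, s in zip(thigh, shank))
    norm = math.hypot(*thigh) * math.hypot(*shank)
    cos_angle = max(-1.0, min(1.0, dot / norm))   # guard against rounding outside [-1, 1]
    return 180.0 - math.degrees(math.acos(cos_angle))


def knee_flexion_series(hips, knees, ankles):
    """Apply knee_flexion_deg frame by frame."""
    return [knee_flexion_deg(h, k, a) for h, k, a in zip(hips, knees, ankles)]


print(knee_flexion_deg((0, 0, 2), (0, 0, 1), (0, 0, 0)))  # straight leg, approximately 0
print(knee_flexion_deg((0, 0, 2), (0, 0, 1), (1, 0, 1)))  # right angle, approximately 90
```

This three-point angle is measured in the plane defined by the three markers rather than in a strict sagittal plane. That is usually acceptable for walking but differs from segment-based joint angles computed in Cortex or Visual3D.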

After the marker coordinates were obtained with the method presented in this paper, they were compared with the Cortex outputs, and the knee joint angle was compared with previous research. The marker trajectories from Cortex and SkillSpector were similar but did not match entirely (Figure 2). This inconsistency arose because SkillSpector and Cortex captured motion at different frame rates and their coordinate system origins did not coincide. Most correlation coefficients were approximately 1 (Figure 3), and only the Y-axis values were small (Figure 4), so the low Y-axis coefficients are probably due to calibration error along the Y axis. A more rigid calibration object is expected to increase the accuracy of the method.

In 2007, Kolahi and colleagues at Sharif University of Technology designed a system for analyzing human movement (Sharif Motion Analyzer, SMA) [19]. To validate their work, Kolahi, et al. compared knee angle changes with the results of Winter's 1991 study [20]. Figure 5B shows the graph from Kolahi's study. In addition, according to Nordin and Frankel (2001), the knee angle during a gait cycle ranges from 0 to 60 degrees, as seen in Figure 5B [21].

Comparing the knee angle changes during a walking cycle in Figures 5A and 5B suggests that the SkillSpector system and the method presented in this study are valid for human movement modeling. The minor differences among the three graphs are due to the subject being different in each case. In Figure 5A, the knee joint angle ranges from 0 to 60 degrees, which matches previous studies.

A related example is the study by Gu, et al., who investigated the feasibility of gait analysis with a single RGB camera and found that markerless 3D gait modeling with a single camera is not a suitable method [22].

In this research, we aimed to obtain three-dimensional data with minimal equipment (two cameras). Reducing the number of cameras decreases measurement accuracy. However, the benefits encourage us to develop and use this method in the future: it is accessible outside the laboratory, easy to set up, and more economical.

The main objective of this research was to obtain a three-dimensional model of the subject's movement in C3D format using two video cameras and to validate the data. A new method is presented for tracking and modeling 3D body movement that reduces costs and addresses laboratory limitations. The method uses two video cameras, a calibration object, and a set of markers. The C3D data are obtained with the open-source SkillSpector software and a MATLAB program.

Based on the comparison with the Cortex system and with previous research, this method can provide researchers with reliable data on human movement, including walking. A key advantage is that it can be used in different environments and is not limited to the laboratory. Errors can be reduced further by improving the equipment, for example with a better calibration object and with camera stands that remain completely stable during the test. With proper marker placement, camera positioning, and movement recording, the movement can be analyzed in SkillSpector. Converting the output file to C3D format then provides the required kinematic information.

Appendix

The MATLAB code below converts the SkillSpector output file to C3D format using C3Dserver library commands. It assumes that the input file has already been read into MATLAB.

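```matlab
% Open the C3Dserver interface
itf = C3Dserver;

% Convert each coordinate vector read from the SkillSpector output
% to a single-precision row vector
toeXpos = single(toeXpos');
toeYpos = single(toeYpos');
toeZpos = single(toeZpos');
LankleXpos = single(LankleXpos');
LankleYpos = single(LankleYpos');
LankleZpos = single(LankleZpos');

% Heel: average of the two heel markers (A and B in Figure 1)
heelXpos = single(((LheelXpos + MheelXpos)/2)');
heelYpos = single(((LheelYpos + MheelYpos)/2)');
heelZpos = single(((LheelZpos + MheelZpos)/2)');

LkneeXpos = single(LkneeXpos');
LkneeYpos = single(LkneeYpos');
LkneeZpos = single(LkneeZpos');
hipXpos = single(hipXpos');
hipYpos = single(hipYpos');
hipZpos = single(hipZpos');

% Create a new C3D file. The numeric arguments include the frame rate
% (120 fps), the number of frames (136) and the number of markers (5).
% Replace 'filename' with the output file name.
createc3d(itf, 'filename', 120, 136, 5, 0, 0, 1, 2, 0.001);

% SetPointDataEx(itf, marker index, axis, start frame, values)
% Markers: 0 = toe, 1 = ankle, 2 = heel, 3 = knee, 4 = hip
% Axes: 0 = X, 1 = Y, 2 = Z; coordinates are scaled by 1000 (presumably m to mm)
SetPointDataEx(itf, 0, 0, 1, 1000*toeXpos);
SetPointDataEx(itf, 0, 1, 1, 1000*toeYpos);
SetPointDataEx(itf, 0, 2, 1, 1000*toeZpos);
SetPointDataEx(itf, 1, 0, 1, 1000*LankleXpos);
SetPointDataEx(itf, 1, 1, 1, 1000*LankleYpos);
SetPointDataEx(itf, 1, 2, 1, 1000*LankleZpos);
SetPointDataEx(itf, 2, 0, 1, 1000*heelXpos);
SetPointDataEx(itf, 2, 1, 1, 1000*heelYpos);
SetPointDataEx(itf, 2, 2, 1, 1000*heelZpos);
SetPointDataEx(itf, 3, 0, 1, 1000*LkneeXpos);
SetPointDataEx(itf, 3, 1, 1, 1000*LkneeYpos);
SetPointDataEx(itf, 3, 2, 1, 1000*LkneeZpos);
SetPointDataEx(itf, 4, 0, 1, 1000*hipXpos);
SetPointDataEx(itf, 4, 1, 1, 1000*hipYpos);
SetPointDataEx(itf, 4, 2, 1, 1000*hipZpos);

% Fill axis index 3 with zeros for every marker and all 136 frames
% (presumably the point residual)
for i = 0:4
    SetPointDataEx(itf, i, 3, 1, single(zeros(1,136)));
end

% Write the C3D file to disk
savec3d(itf);
```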

- Leardini A, Belvedere C, Nardini F, Sancisi N, Conconi M, et al. Kinematic models of lower limb joints for musculo-skeletal modelling and optimization in gait analysis. J Biomechanics. 2017; 62: 77-86. PubMed: https://pubmed.ncbi.nlm.nih.gov/28601242/

- Whittle MW. Gait Analysis: An Introduction. 2007.

- Bartlett R. Introduction to sports biomechanics: Analysing human movement patterns: Routledge; 2014.

- Bjering B. Estimations of 3D velocities from a single camera view in ice hockey. 2019.

- Castro JG, Medina-Carnicer R, Galisteo AM. Design and evaluation of a new three-dimensional motion capture system based on video. Gait Posture. 2006; 24: 126-129. PubMed: https://pubmed.ncbi.nlm.nih.gov/16168656/

- Fernández-Baena A, Susín A, Lligadas X, editors. Biomechanical validation of upper-body and lower-body joint movements of kinect motion capture data for rehabilitation treatments. 2012 Fourth International Conference on Intelligent Networking and Collaborative Systems. IEEE. 2012.

- c3d.org. 2016. https://www.c3d.org/c3ddocs.html/

- Motion Lab Systems. C3D File Format User Manual. 2002 [Internet]. 2016. https://www.c3d.org/pdf/c3dformat_ug.pdf

- Almashagbah EFM. Simple and efficient marker-based approach in human gait analysis using Gaussian mixture model. 2014.

- Wang L, Hu W, Tan T. Recent developments in human motion analysis. Pattern Recognition. 2003; 36: 585-601.

- Prakash C, Kumar R, Mittal N. Recent developments in human gait research: parameters, approaches, applications, machine learning techniques, datasets and challenges. Artificial Intelligence Review. 2018; 49: 1-40.

- Sigelman B. Video-Based Tracking of 3D Human Motion Using Multiple Cameras: Brown University Providence, Rhode Island; 2003.

- Adeli-Mosabbeb E, Fathy M, Zargari F. Model-based human gait tracking, 3D reconstruction and recognition in uncalibrated monocular video. Imaging Sci J. 2012; 60: 9-28.

- Abdel-Aziz Y, Karara H, Hauck M. Direct linear transformation from comparator coordinates into object space coordinates in close-range photogrammetry. Photogrammetric Engineering Remote Sensing. 2015; 81: 103-107.

- Miller E, Kaufman K, Kingsbury T, Wolf E, Wilken J, et al. Mechanical testing for three-dimensional motion analysis reliability. Gait Posture. 2016; 50: 116-119. PubMed: https://pubmed.ncbi.nlm.nih.gov/27592076/

- Payton CJ, Burden A. Biomechanical evaluation of movement in sport and exercise: the British Association of Sport and Exercise Sciences guide: Routledge. 2017.

- Chen L, Armstrong CW, Raftopoulos DD. An investigation on the accuracy of three-dimensional space reconstruction using the direct linear transformation technique. J Biomechanics. 1994; 27: 493-500. PubMed: https://pubmed.ncbi.nlm.nih.gov/8188729/

- video4coach.com. http://video4coach.com/index.php.

- Kolahi A, Hoviattalab M, Rezaeian T, Alizadeh M, Bostan M, Mokhtarzadeh H. Design of a marker-based human motion tracking system. Biomedical Signal Processing and Control. 2007; 2: 59-67.

- Winter DA. Biomechanics and motor control of human gait: normal, elderly and pathological. 1991.

- Nordin M, Frankel VH. Basic biomechanics of the musculoskeletal system: Lippincott Williams & Wilkins; 2001.

- Gu X, Deligianni F, Lo B, Chen W, Yang G-Z, editors. Markerless gait analysis based on a single RGB camera. 2018 IEEE 15th International Conference on Wearable and Implantable Body Sensor Networks (BSN). IEEE. 2018.